On Communicating High-Dimensional Thought

Abstract

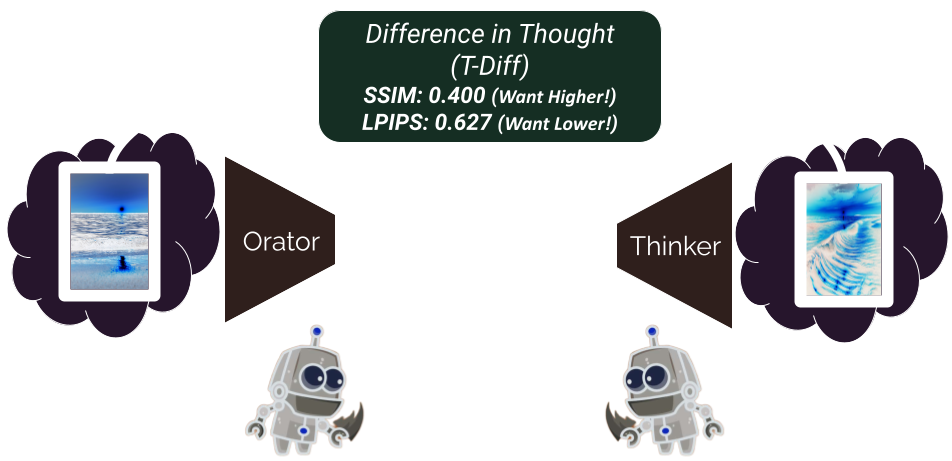

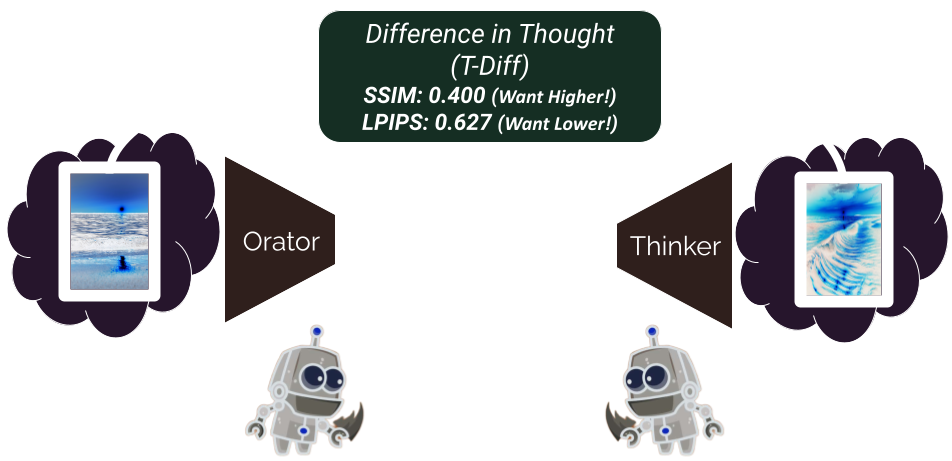

This article presents a framework for the harmonious integration of Artificial Intelligence (AI) into human society. The key principle driving our framework is effective communication. To this end, we identify and discuss the lower-dimensional, communicative representation of higher-dimensional thoughts, or hallucinations, that arise from sampling fluidly modal datapoints in memory. In our novel Orator-Thinker setup, we describe both the communicative behavior and the hallucinations in detail, while providing a multi-agent framework for AI to grow as a 'species' and evolve to become a part of society. Our key contribution is the principle of cross-dimensional thought transfer, which we introduce to evaluate systems that claim to exhibit Artificial General Intelligence (AGI).

Introduction

A commonly cited reason for the evolution and advancement of humans as a species is our ability to communicate effectively using language [1, 2, 4]. Humans use language in various ways, with words describing objects, events, situations, feelings, and many other environmental phenomena. It can be argued that the complexity of the language a society uses translates to the intelligence of that society [5, 7].

Words or phrases of a particular language are used to describe such phenomena, enabling the derivation or extraction of knowledge. For instance, the phrase “The sunset was beautiful!” could be comprehended by anyone sufficiently competent in the English language, causing them to “hallucinate”, or render in their head, a mental image of the sun setting, in a style derived from their own personal preferences of what constitutes beauty. These hallucinated thoughts, however, need not be an accurate representation of what the original speaker wanted to convey.

Humans as a species evolved as a result of such communications, which were crucial for conveying various environmental phenomena [3]. Imminent dangers could be described by creating words to convey what had been experienced. For instance, the statement “A bear is nearby” requires people to share a consensus on what the words “bear” and “nearby” mean in order to collectively grasp the phenomenon and take appropriate safety measures. This would in turn encourage humans to learn some form of language to communicate.

In fact, some [8] argue that language requires "experience" to be grounded. This means that at some point in our evolution, there lived cavemen who did not understand what "bear" meant due to a lack of perceptual experience, and were eaten as a result.

While words themselves do not need to be linguistically complex in terms of their interrelational structure, the mere existence of a word to describe a phenomenon greatly increases the coverage of a conversation, significantly impacting collective intelligence. In a globalized society, the interchange of information from one language to another has enabled us to survive dangers that one community might have faced and another might not have.

Such communications often involve instilling some mental imagery of what has been physically experienced, using objects occurring in the vicinity of both humans in conjunction with previously established or commonsense concepts. We argue that such a level of communication is crucial for an AI agent to replicate human-like behavior, as it may need to ground alien concepts (outside or within the expanse of total human intelligence) for a human user.

For purposes of experimentation, we consider a two-agent framework:

References